Abstract

This thesis collects the outcomes of a Ph.D. course in Electronics, Telecommunications, and Information Technologies Engineering. It focuses on the study and design of techniques for optimizing resources in distributed mobile platforms.

The setting is a typical smart city environment, where the aim is to enhance the quality, performance and interactivity of urban services.

The subject is computation offloading, understood as the delegation of certain computing tasks to an external platform, such as a cloud or a cluster of devices. Offloading computation tasks can effectively extend the usability of mobile devices beyond their physical limits, and may be necessary when a system cannot handle a particular task on its own.

Computation offloading within an ecosystem such as an urban community, where a large number of users are connected, often through multiple devices, is a challenging subject. In the very near future, smart cities will be distinctive sources of intensive computing tasks, since they are conceived as systems where e-governance will be not only transparent and fast, but also oriented to energy and water conservation, efficient waste disposal, city automation, seamless travel facilities and affordable access to health management systems. Traffic will also need to be monitored intelligently, emergencies foreseen and resolved quickly, and homes and citizens provided with a wide range of control and security devices. All these ambitious aspirations will require the deployment of infrastructures and systems where devices generate massive amounts of data and must be orchestrated collectively to pursue synergistic goals. In this context, computation offloading is an operation that supports the optimization of urban services, reducing costs and resource consumption and improving contact between citizens and government.

This dissertation is organized in three main parts, dealing with the optimization of resources in a smart city context from different points of view.

The first part introduces the Urban Mobile Cloud Computing (UMCC) framework, a system model that takes into account a series of features related to Heterogeneous Networks (HetNets), cloud architectures, various characteristics of the Smart Mobile Devices (SMDs) and different types of smart city applications pursuing several goals.

The second part deals with partial offloading, in which only a portion of the computation load is delegated to a cloud infrastructure. It focuses on the tradeoff between energy consumption and execution time, in a non-trivial multi-objective optimization approach. Furthermore, a utility function model derived from economics is introduced. It takes into account a series of parameters related to the UMCC, showing that, when the network is overloaded, partial offloading achieves the target throughput values with lower energy consumption and computation time than total offloading of the computation tasks. In addition, the proposed UMCC framework and partial computation offloading are applied to a vehicular environment for handling a real-time navigation application, so that the SMDs can exploit road side units and other neighboring devices, forming clusters to which a shared application is delegated. It is shown that clustering reduces the consumed energy in high-traffic scenarios, and the cluster size is optimized for different population sizes and various offloading policies.

Finally, in the third part, the problem of Cell Association (CA) in a UMCC framework is addressed by treating the system as a community, with the aim of improving the collective performance and not only that of a single device. A probabilistic algorithm that uses biased-randomization techniques is proposed as an efficient alternative to exact methods, which require unacceptable computation times to solve real-life instances. This probabilistic algorithm is able to provide near-optimal solutions in real time, thus outperforming by far the solutions provided by existing greedy heuristics. Since the algorithm takes all users into account in the assignment process, it avoids the selfish or myopic behavior of the greedy heuristic and, at the same time, quickly finds near-optimal solutions for the allocation of the available resources.

Introduction

According to the United Nations flagship publication World Urbanization Prospects, more than one half of the world population now lives in urban areas, and about 70% will be city dwellers by 2050. Furthermore, the world population is estimated to increase in the second half of the 21st century, while urban areas are expected to absorb all the predicted growth and to draw in some of the rural population. The United Nations report predicts that, by mid-century, there will be 27 megacities, each with at least 10 million inhabitants, while at least half of the urban growth in the coming decades will occur in small cities with fewer than 500,000 people. Cities, big or small, are therefore driving a decisive shift in the organization of human society. Cities and megacities are predicted to magnify problems such as difficulty in waste management, scarcity of resources, air pollution, human health concerns, traffic congestion, and inadequate, deteriorating and aging infrastructures.

Concurrently with such urbanization effect, an extraordinary phenomenon concerning the Information and Communication Technology (ICT) is happening: smart mobile devices are becoming an essential part of human life and the most effective and convenient communication tools, not bounded in time and place. According to the Cisco Visual Networking Index, the number of mobile-connected devices has already overtaken the number of people in the world, and by 2018 it will be over 10 billion, including Machine to Machine (M2M) modules. Overall mobile data traffic is expected to have nearly an 11-fold increase in the next five years.

The urbanization trend and the expansion of smart mobile devices are converging in the concept of the smart city, an icon of a sustainable and livable city that projects the ubiquitous and pervasive computing paradigms onto urban spaces, focusing on developing city network infrastructures, optimizing traffic and transportation flows, lowering energy consumption and offering innovative services. It is through ICT that smart cities are truly turning smart [1], in particular through the exploitation of smart mobile devices which, together with cloud computing, form Mobile Cloud Computing (MCC). As suggested by Michael Batty, to understand cities we must view them not simply as places in space but as systems of networks and flows [2].

Original Contribution

In this dissertation, innovative techniques and methodologies aimed at enhancing the performance of MCC applied to a smart city are proposed and investigated.

In particular, the UMCC is introduced: a global framework that can be adapted depending on the optimization objectives, highlighting the features that can affect the QoS of various types of smart city related applications.

Furthermore, various optimization techniques, based on suitably defined cost or utility functions, are presented and examined for the optimization of the resources in the UMCC.

Specifically:

- a partial offloading technique is defined for optimizing time and energy consumption in a smart city HetNet scenario, where smart mobile devices are supposed to perform a distributed application;

- a utility function model derived from economics is presented, aiming to measure the QoS in order to choose the best access point in a HetNet for offloading part of an application;

- a cluster-based optimization technique is proposed and utilized in a distributed computing resource allocation, exploiting resource sharing, in high density SMD environments.

During my Ph.D. course I had the opportunity to collaborate with the Internet Interdisciplinary Institute (IN3) at the Open University of Catalonia (UOC), where I contributed to developing new optimization heuristics for improving heterogeneous communication systems.

The applied approach is based on the use of biased randomization techniques, which have been used in the past to solve similar combinatorial optimization problems in the fields of logistics, transportation, and production.

This work extends their use to the field of smart cities and mobile telecommunications. Some numerical experiments help illustrate the potential of the proposed approach. The outcomes of this research stay are described in Chapter 5.

Personal Publications

[p1] D. Mazza, D. Tarchi, and G. E. Corazza, "A Unified Urban Mobile Cloud Computing Offloading Mechanism for Smart Cities," Communication Magazine, IEEE (submitted).

[p2] D. Mazza, A. Pages, D. Tarchi, A. Juan, and G. E. Corazza, "Supporting Mobile Cloud Computing in Smart Cities via Randomized Algorithms," Systems Journal, IEEE, DOI:10.1109/JSYST.2016.2578358.

[p3] D. Mazza, D. Tarchi, and G. Corazza, "A cluster based computation offloading technique for mobile cloud computing in a smart city scenario," in Proc. of IEEE Conference on Communication (ICC) 2016, Kuala Lumpur, Malaysia.

[p4] D. Mazza, D. Tarchi, and G. E. Corazza, "A user-satisfaction based offloading technique for smart city applications," in Proc. of IEEE Globecom 2014, Austin, TX, USA, Dec. 2014.

[p5] D. Mazza, D. Tarchi, and G. E. Corazza, "A partial offloading technique for wireless mobile cloud computing in smart cities," in Proc. of 2014 European Conference on Networks and Communications (EuCNC), Bologna, Italy, Jun. 2014.

Acknowledgments

This Ph.D. dissertation was carried out during three years of my life in which I not only gained knowledge and completed an academic path, but also had the opportunity to test and enrich my personality. I would like to thank Prof. Giovanni Emanuele Corazza and Prof. Daniele Tarchi for their precious advice and support during my Ph.D. studies. They helped me during every step of my work with unmatched competence and patience.

A sincere thanks goes to Prof. Ángel Alejandro Juan Pérez and Prof. Adela Páges Bernaus for giving me the chance to work with them at the DPCS IN3 Lab of the Open University of Catalonia: for their collaboration and for being always available to share projects and ideas and because my stay in Barcelona has been a unique experience for me, both professionally and personally.

I am also deeply grateful to Prof. Alessandro Vanelli Coralli, Coordinator of the Ph.D. Program in Electronics, Telecommunications, and Information Technologies, for making it possible to carry out this work.

A special acknowledgment goes also to all my colleagues that shared with me many important moments during my time at the University of Bologna - Roberta, Sergio, Vahid, Vincenzo - and at the Internet Interdisciplinary Institute IN3 in Barcelona - Aljoscha, Carlos, Jesica, Laura, Helena.

I also want to thank the reviewers of this thesis, Prof. Flavio Bonomi and Prof. Mohsen Guizani, for their many suggestions and help for greatly improving the quality of this work.

Finally, my greatest thanks go to my family. Thanks to Gabriele, my life partner, for sharing this experience with me and for supporting and encouraging me; to my parents, for instilling in me the love of study and research; and to my son Alessandro, who has always given me the strength to keep devoting myself to research.

Part I - Urban Mobile Cloud Computing: a framework at the service of smart cities

Innovative smart city projects, which aim to realize a vision where cities use technology to meet sustainability goals, boost local economies, and improve urban services, have been adopted as a primary program in the political agenda of many governments, in a large number of developed and developing countries. This development is in line with the evolutionary trends in the Information Society [3].

The ever-growing demand for services from citizens and institutions, intending to make cities smarter and improve the quality of life of their communities, has given a great boost to the conception of diverse wireless communication systems and has extended the vision of cloud architectures for providing infrastructures (IaaS), platforms (PaaS), and software (SaaS) [4], [5], offering computation, storage and networking, and moving towards integration with novel opportunistic communications such as fog networking [6], [7], [8].

In order to interact with city services, MCC and wireless HetNets contribute in different and synergistic ways to handling this smart city scenario, allowing ubiquitous and pervasive computing in a framework we call UMCC.

In this first part of the dissertation, the proposed UMCC system model is described and investigated. It takes into account a series of features related to HetNet nodes, cloud architectures and SMD characteristics, in association with several types of applications and goals - mobility, healthcare, energy and waste management, and so on. It can be employed to optimize the QoS requirements related to the needs of citizens.

Smart city applications are attracting increasing interest among administrations, citizens and technologists for their suitability in managing everyday life. One of the major challenges is efficiently managing multiple applications in this UMCC framework, in a wireless HetNet environment, alongside an MCC infrastructure.

The content of the following chapter was extracted from publications [p1], [p2] and [p3].

Chap. 1. The Urban Mobile Cloud Computing (UMCC) Architecture

1.1 Introduction to the UMCC in a smart city scenario

The increasing urbanization level of the world population has driven the development of technology toward the definition of a smart city geographic system, conceived as a wide area characterized by the presence of a multitude of smart devices, sensors and processing nodes aiming to distribute intelligence into the city; moreover, the pervasiveness of wireless technologies has led to the presence of heterogeneous networks operating simultaneously in the same city area. One of the main challenges in this context is to provide solutions able to optimize jointly the activities of data transfer, exploiting the heterogeneous networks, and data processing, by using different types of devices. In this chapter, the UMCC framework is introduced, considering a mobile cloud computing model that describes the flows of data and operations taking place in the smart city scenario.

The challenges and the opportunities of exploiting the UMCC are discussed in relation to smart city solutions, highlighting the features that can affect the QoS of various types of smart city related services.

The UMCC stems from MCC, which has been gaining increasing interest in recent years thanks to the possibility of exploiting both cloud computing and mobile devices to enable a distributed cloud infrastructure [9].

Considering the peculiarities of MCC, we can observe that, on the one hand, cloud computing was introduced as a means of enabling remote computation, storage and management of information and, on the other hand, mobility makes it possible to take advantage of the most modern smart devices and broadband connections to create a distributed and flexible virtual environment.

At the same time, recent advances in wireless technologies are defining a novel pervasive scenario where several heterogeneous wireless networks interact with one another, giving users the ability to select the best network among those present in a certain area.

As a consequence, the UMCC is introduced, drawing on both computing and wireless communication technologies. It is a challenging opportunity for the creation of smart city infrastructures, providing solutions that fulfill the urgent need for richer applications and services requested by citizens who, as mobile users, face many demanding tasks in relation to mobile device resources such as battery life, storage and bandwidth.

The triple role of Smart Mobile Devices

By analyzing the technology systems underlying a smart city framework, mobile devices can be considered in a three-fold way, as illustrated in fig.1:

- Sensors:

They can acquire different types of data regarding the users and the environment, transmitting a large amount of information to the cloud in real time, by means of wireless communication systems. This is the underlying concept of IoT, profitably exploited to improve urban life, for instance for extracting descriptive and predictive models in the urban context of cities [10]. As well as the expansion of Internet-connected automation into a plethora of new application areas, IoT is also expected to generate large amounts of data from diverse locations, with the consequent necessity for quick aggregation of the data, and an increase in the need to index, store, and process such data more effectively.

- Nodes:

They can form distributed mobile clouds where the neighboring mobile devices are merged for resource sharing, becoming integral part of the network. Furthermore, they can form VCNs offering content routing, security, privacy, monitoring, virtualization services [11], easy to be used for providing smart city services and applications, in particular for traffic and mobility control. This is the crucial concept of fog networking, where a collaborative multitude of users carry out a substantial amount of storage, communication, and data management in a collaborative way.

- Outputs:

They can make the citizens aware of results and able to decide consequently, or become actuators without need of human intervention. This is the concept underlying M2M communications where computers, embedded processors, smart sensors, actuators and mobile devices acquire information and act in an autonomous way [12].

To perform this triple role, mobile devices have to become part of an infrastructure made up of different cloud topologies and, at the same time, have to exploit heterogeneous wireless link technologies, making it possible to address the different requirements of a smart city scenario. This infrastructure starts from the concept of MCC, where the cloud works as a powerful complement to resource-constrained mobile devices.

The vision of MCC has attracted growing interest since the early 2000s, when Amazon realized that a huge amount of space on its premises was underused. This awareness pushed toward the implementation of remote services that take advantage of the available storage space and computing power, creating a cloud system. Along with the expansion of wireless technologies, cloud computing has been integrated with broadband systems, exploiting the opportunity of working in mobility. The SMDs can then use MCC by delegating demanding tasks to it and relying on it for data storage.

Computation Offloading

The strategy of delegating storage and computing functions to one or more cloud computing elements is commonly called cyberforaging or computation offloading. It makes it possible to cope with the limited battery power and computation capacity of the SMDs, and plays a key role in a smart environment where wireless communication is of utmost relevance, particularly in the mobility and traffic control domains [13]. While storage is one of the most common and long-established activities that can be delegated to a remote cloud infrastructure, modern programming paradigms now make it possible to allot even just a part of the computation load to a remote unit. This allows users to optimize the system performance by offloading only a fraction of the application to be computed, or by distributing the application among different cloud structures. Offloading is also an effective strategy for reducing network congestion and solving the overload issue, compared to scaling and optimization [14]. It enables network operators to reduce congestion in cellular networks, while for the end user it provides cost savings on data services and higher bandwidth availability.

1.2 Cloud Topologies

In relation to the SMD roles previously described, we take into account various cloud topologies. This categorization differs from the common taxonomy used for cloud computing - SaaS, PaaS and IaaS. It looks at the different interactions among the nodes that form the cloud, rather than at the services provided by the cloud itself, so we can distinguish among centralized cloud, cloudlet, distributed mobile cloud and a combination of all, as shown in fig 2.

Centralized Cloud

A centralized cloud allows citizens to interact remotely, e.g., to access open data delivered by public administrations. It refers to a remote cloud computing infrastructure with a huge, virtually infinite, amount of storage space and computing power, offering the major advantage of elastic resource provisioning. The centralized cloud infrastructure is often used for delegating computing processes to remote clusters with higher computing power, and/or for storing large amounts of data. The centralized cloud reduces the computing time by exploiting powerful processing units, but it can suffer from latency, due to the data transfer from the users to the cloud and vice versa, from congestion, due to simultaneous use by multiple users, and from poor resiliency, due to the presence of a single infrastructure, which leads to the SPOF issue.

Cloudlet

One of the main drawbacks of the centralized cloud is the great distance between the mobile devices requesting services and the clusters performing computation in the cloud. Even if the SPOF issue is often resolved by implementing mirroring or redundancy solutions, the large distance that may separate users from centralized clouds is better addressed by introducing cloudlets, i.e., small clouds installed in proximity of the users. Furthermore, cloudlets allow more appropriate sizing depending on the number of simultaneous user requests.

Cloudlets are small fixed cloud infrastructures installed between the mobile devices and the centralized cloud, whose use is limited to the users in a specific area. Their introduction decreases the latency of access to cloud services by reducing the transfer distance, at the cost of using smaller and less powerful cloud devices.
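
The tradeoff between a nearby but less powerful cloudlet and a distant but powerful centralized cloud can be illustrated with a minimal Python sketch. It is not the model used in this thesis: the response-time formula (round trip, plus data transfer, plus remote computation), the `CloudSite` type and all numeric values are assumptions chosen only to make the two regimes visible.

```python
from dataclasses import dataclass


@dataclass
class CloudSite:
    """A remote computing site as seen from the mobile device (hypothetical values)."""
    name: str
    round_trip_s: float    # network round-trip delay to reach the site, in seconds
    throughput_bps: float  # end-to-end throughput towards the site, in bit/s
    speed_ops: float       # processing speed of the site, in operations/s


def response_time(site: CloudSite, operations: float, data_bits: float) -> float:
    """Assumed model: round trip + time to ship the data + time to compute remotely."""
    transfer = data_bits / site.throughput_bps
    compute = operations / site.speed_ops
    return site.round_trip_s + transfer + compute


# Illustrative numbers only: the centralized cloud is faster but farther away.
centralized = CloudSite("centralized cloud", round_trip_s=0.1,
                        throughput_bps=10e6, speed_ops=100e9)
cloudlet = CloudSite("cloudlet", round_trip_s=0.01,
                     throughput_bps=50e6, speed_ops=10e9)


def faster_site(operations: float, data_bits: float) -> CloudSite:
    """Return the site with the lower estimated response time for this task."""
    return min((centralized, cloudlet),
               key=lambda site: response_time(site, operations, data_bits))


if __name__ == "__main__":
    for label, ops in (("light task", 1e8), ("heavy task", 1e11)):
        print(label, "->", faster_site(ops, data_bits=1e6).name)
```

Under these assumed numbers the light task is served faster by the cloudlet, because the transfer delay dominates, while the heavy task is served faster by the centralized cloud, because the computing time dominates.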

Distributed Mobile Cloud

A third configuration can address the issue of non-persistent connectivity, whereas both the previous concepts must assume a durable state of connection. In a distributed mobile cloud the neighboring mobile devices are pooled together for resource sharing [15]. An application from a mobile device can be either processed in a distributed and collaborative fashion on all the mobile devices or handled by a particular mobile device that acts as a server.

The possibility of implementing a distributed mobile cloud infrastructure has become a reality since the introduction of smarter and more powerful mobile devices, e.g., smartphones, tablets and phablets, which have the ability, even if limited, to compute and store data. Moreover, their number is still increasing, leading to a pervasive presence and making it possible to form a cloud of pervasive distributed devices that interact with one another. This fog network architecture uses one or a collaborative multitude of end-user clients or near-user edge devices to carry out a substantial amount of storage (rather than storing data in centralized clouds), communication, and control, configuration, measurement and management [7], [8]. It can be seen as the fog layer that encapsulates physical objects - equipped with computing, storage, networking, sensing, and/or actuating resources - and constitutes a piece of a wider CARS architecture [16], a geographically distributed platform that connects many billions of sensors and things, and provides multitier layers of abstraction of sensors and sensor networks, enabling the SenaaS.

Combination of different topologies

The proposed framework foresees the joint exploitation of the three aforementioned topologies. As outlined before, they are characterized by different features, leading to a different usage depending on the scenario. Hence, their joint exploitation can lead to a more efficient usage, aimed at achieving the performance goals of a given application. As will be specified below, a smart city scenario is characterized by the presence of many different applications, each with its own characteristics and requirements. An integrated UMCC framework composed of centralized clouds, cloudlets and distributed mobile clouds, as shown in fig 2, makes it possible to meet the application requirements more efficiently than other solutions.

1.3 Types of RATs

In order to connect the devices, different types of RATs should be taken into consideration, providing a pervasive wireless coverage.

Multiple RATs, such as IEEE 802.11, mobile WiMAX, HSPA+, LTE and WiFi, have to be integrated to form a HetNet. To enhance the network capacity, there is an increasing interest in deploying relays, distributed antennas and small cellular base stations - picocells, femtocells, etc. - indoors in residential homes and offices as well as outdoors in amusement parks and busy intersections. These new network deployments consist of a mix of low-power nodes underlying the conventional homogeneous macrocell network. By deploying additional small cells within the local-area range and bringing the network closer to users, they can significantly boost the overall network capacity through a better spatial resource reuse. Inspired by the attractive features and potential advantages of HetNets, their development has gained much momentum in the wireless industry and research communities during the past few years. The heterogeneous elements are distinguished by their transmit powers/coverage areas, physical size, backhaul, and propagation characteristics.

We can basically distinguish between two components, i.e., macrocells and small cells, where the former provide mobility while the latter boost coverage and capacity.

Macrocells

The distance between the access points (base stations of the macrocells) is usually greater than 500 m. Thanks to this type of base station, the environment is completely covered and the devices can move while minimizing the handover frequency. On the other hand, in macrocells the system suffers from channel fading and traffic congestion. This leads to a lack of stability, which prevents very high data rates from being reached. The technology used for this type of cell is that of cellular networks, e.g. 3G and LTE.

Small Cells

Small cells are characterized by low-power radio access nodes, which have a coverage range of about 100-200 m or less. We can distinguish between picocells, which provide hotspot coverage in public places - malls, airports and stadiums - without limits in terms of number of connected devices, and femtocells, which cover a home or small business area and are available only to selected devices. Picocells and femtocells have recently been introduced as a way of increasing the coverage and maximizing the resource allocation in LTE networks. We also consider WiFi access points as nodes with a small coverage range (less than 100 m) which can typically communicate with a small number of client devices. However, the actual range of communication can vary significantly, depending on such variables as indoor or outdoor placement, the current weather, operating radio frequency, and the power output of devices.

1.4 Challenges of the UMCC

The UMCC approach envisages a scenario where smart city applications can jointly exploit the three topologies, as shown in fig.3, by being distributed and executed among the different parts composing the framework. The application requested by a particular SMD, denoted as the RSMD, is partitioned and distributed among the different clouds using the available RATs. In the example of fig.3, the application is divided among the centralized cloud, two cloudlets, and a distributed cloud formed by five devices. Furthermore, part of the application can be computed locally by the RSMD itself.

The main issue is that transferring data from the requesting mobile device to the selected cloud topology requires a certain time. This mostly depends on communication parameters of the selected RAT, such as the end-to-end throughput, the number of users, and the QoS management of the transmission technology between the user device and each type of cloud processing unit. Furthermore, in terms of energy consumption, the tradeoff between the energy saved by offloading part of the application to the cloud and the energy spent in sending the data should be taken into account.

Hence, when an RSMD needs to select the cloud infrastructures to be used for computing the smart city application, two main elements have to be taken into account:

- the processing and storage devices - smart mobiles, per se or together forming distributed mobile clouds, and cloud servers, constituting the cloudlets and the centralized cloud;

- the wireless transmission equipment - different RAT nodes entailing diverse transmission speeds in relation to their own channel capacity and to the number of linked devices.

In fig.3, the UMCC framework is sketched by representing the functional flows of the architecture. Whenever a smart city application has to be performed, the citizen within the UMCC can select among different MCC infrastructures, i.e., centralized clouds, cloudlets, and distributed mobile clouds, aiming to meet the requirements of the specific application depending on their features. The distribution depends on the application requirements and on the UMCC features; its optimization will be discussed in Section 1.7.
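
As a rough illustration of the tradeoff noted in this section between the energy saved by offloading and the energy spent in sending data, the following Python sketch evaluates the cost of offloading a fraction `x` of an application. It is a simplified model under stated assumptions, not the formulation developed in this thesis: the device is assumed to draw a constant power while computing, transmitting and idling; the local and remote shares are assumed to run in parallel; the energy terms are simply added; and every name and number is hypothetical.

```python
def offloading_cost(x, ops, data_bits, f_local, f_cloud, rate_bps,
                    p_local, p_tx, p_idle):
    """Return (device energy in J, completion time in s) when a fraction x
    of the application is offloaded. Simplified, assumed model."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    t_local = (1.0 - x) * ops / f_local   # time to compute the local share
    t_tx = x * data_bits / rate_bps       # time to send the offloaded data
    t_cloud = x * ops / f_cloud           # time the cloud needs for its share
    # Assumption: energy terms are summed, ignoring any overlap between phases.
    energy = p_local * t_local + p_tx * t_tx + p_idle * t_cloud
    # Assumption: local and remote shares proceed in parallel.
    time = max(t_local, t_tx + t_cloud)
    return energy, time


def fastest_fraction(steps=100, **kwargs):
    """Grid search for the offloaded fraction with the shortest completion time."""
    candidates = [i / steps for i in range(steps + 1)]
    best_x = min(candidates, key=lambda x: offloading_cost(x, **kwargs)[1])
    return best_x, offloading_cost(best_x, **kwargs)[1]


# Hypothetical application and device parameters (illustrative only).
PARAMS = dict(ops=1e10, data_bits=8e6, f_local=1e9, f_cloud=1e10,
              p_local=0.9, p_tx=1.3, p_idle=0.3)

if __name__ == "__main__":
    for rate in (10e6, 1e5):  # a good link and a congested link, in bit/s
        e_local, _ = offloading_cost(0.0, rate_bps=rate, **PARAMS)
        e_full, _ = offloading_cost(1.0, rate_bps=rate, **PARAMS)
        print(f"rate={rate:.0e} bit/s: local {e_local:.2f} J, full offload {e_full:.2f} J")
    print("fastest fraction on the good link:", fastest_fraction(rate_bps=10e6, **PARAMS))
```

With these assumed values, full offloading saves energy on the good link but costs far more than local execution on the congested link, and the shortest completion time is obtained at an intermediate fraction, which is the motivation for considering partial offloading.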

Computation, storage, and transmission features

The features of the selected processing and storage devices, considered per se or in a group forming cloud/cloudlets, are:

- Processing Speed: The processing speed corresponds to the performance speed of a device or a group of devices for processing the applications;

- Storage Capacity: The storage capacity corresponds to the amount of storage space provided by a device or a group of devices.

At the same time, the features of the transmission equipment to be taken into account are:

- Channel Capacity: The nominal bandwidth of a certain communication technology that can be accessed by a certain device;

- Priority/QoS management: The ability of a certain communication technology to manage different QoS and/or priority levels;

- Communication interfaces: The number of communication interfaces of each device, which affects the possibility of selecting among the available heterogeneous networks.
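
The transmission features listed above can be turned into a small selection sketch in Python. It is only an illustration under explicit assumptions: the channel capacity of a node is assumed to be shared equally among its linked devices plus the newcomer, priority/QoS management is ignored, and the `AccessNode` type, the RAT labels and the numeric values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class AccessNode:
    """A RAT node of the HetNet (hypothetical description)."""
    name: str
    rat: str                     # technology label, e.g. "LTE" or "WiFi"
    channel_capacity_bps: float  # nominal capacity of the node, in bit/s
    linked_devices: int          # devices currently attached to the node


def expected_throughput(node: AccessNode) -> float:
    """Assumption: capacity is shared equally among linked devices and the newcomer."""
    return node.channel_capacity_bps / (node.linked_devices + 1)


def best_node(nodes: Iterable[AccessNode], interfaces: Set[str]) -> Optional[AccessNode]:
    """Pick the reachable node with the highest expected per-device throughput.

    Only nodes whose RAT matches one of the device's communication interfaces
    are considered; None is returned when no node is usable.
    """
    usable = [node for node in nodes if node.rat in interfaces]
    return max(usable, key=expected_throughput, default=None)


# Illustrative deployment: a loaded macrocell, a WiFi access point, a femtocell.
NODES = [
    AccessNode("macrocell", "LTE", 100e6, linked_devices=49),
    AccessNode("WiFi access point", "WiFi", 54e6, linked_devices=5),
    AccessNode("femtocell", "LTE", 20e6, linked_devices=3),
]

if __name__ == "__main__":
    print(best_node(NODES, {"LTE", "WiFi"}).name)  # device with two interfaces
    print(best_node(NODES, {"LTE"}).name)          # device with LTE only
```

The sketch shows why the number of communication interfaces matters: under these assumed loads a device with both interfaces would pick the WiFi access point, while an LTE-only device is restricted to the cellular nodes and would pick the femtocell.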

1.5 The UMCC model

In this section we focus on the different entities playing a role in the UMCC framework, describing their functions and the interactions among them. First of all, we consider an application $\textit{App}$ requested by an RSMD, defined through the number of operations to be executed, $O$, the amount of data to be exchanged, $D$, and the amount of data to be stored, $S$. An application can be seen as a smart city service that can be executed either locally or remotely by exploiting the cloud infrastructures. Furthermore, each application has several requirements regarding the levels of QoS. We have taken into account the following:

- the maximum accepted latency $T_\textit{app}$, intended as the interval between the request of performing an application and the acquisition of its results by the RSMD,

- the minimum level of energy consumption $E_\textit{app}$, that the RSMD necessarily uses for performing the application itself,

- the throughput $\eta_\textit{app}$, intended as the minimum bandwidth that the application needs for being performed.

Hence, to highlight the dependence of $\textit{App}$ on the above quantities, we can write:

\begin{equation} App = App(O,D,S,T_\textit{app},E_\textit{app},\eta_\textit{app}) \tag{1.1} \end{equation}

A fundamental entity acting in the system is the RSMD requesting the $\textit{App}$, which we name $\textit{Dev}$. It is characterized by the features involved in the offloading operation: the power to compute applications locally, $P_l$, the power used for transferring data towards the clouds, $P_{\textit{tr}}$, the power for idling during the computation in the cloud, $P_{\textit{id}}$, the computing speed for local computation, $f_{\textit{l}}$, and the storage availability, $H_{\textit{l}}$. Furthermore, the time-varying position of the device also plays an important role in the system interactions. Hence, we can write:

\begin{equation} Dev = Dev(P_l, P_{\textit{tr}}, P_{\textit{id}}, f_{\textit{l}}, H_{l}, pos_{\textit{dev}}(x, y)) \tag{1.2} \label{eq:Dev} \end{equation}

Focusing on the different types of cloud entities in our scenario, we considered a unique centralized cloud $C_{\textit{cc}}$ and various cloudlets $C_{\textit{cl}}$, each characterized by its own computing speed, i.e., $f_\textit{cc}$ for the centralized cloud and $f_\textit{cl}$ for the cloudlets. Additionally, the storage availability $H_\textit{cl}$ of each cloudlet has to be taken into consideration, while the storage availability of the centralized cloud can be considered infinite and therefore not a constraint on the interaction. Hence, we can write for the centralized cloud $C_{\textit{cc}}$:

\begin{equation} \label{eq:Ccc} C_{\textit{cc}} = C_{\textit{cc}}(f_{\textit{cc}}) \tag{1.3} \end{equation}

and for each cloudlet, considering also the influence of the position $pos_\textit{cl}(x, y)$ of the in-built AP, the end-to-end throughput $\eta_{\textit{cl}}$ provided by the AP itself, the maximum number of devices that it is possible to connect at the same time $n_\textit{cl}$, and the range of action $r_{\textit{cl}}$:

\begin{equation} \label{eq:Ccl} C_{\textit{cl}} = C_{\textit{cl}}(f_{\textit{cl}}, H_{\textit{cl}}, pos_{\textit{cl}}(x, y), \eta_{\textit{cl}}, n_\textit{cl}, r_{\textit{cl}}) \tag{1.4} \end{equation}

We are considering the system from the point of view of a single RSMD requesting to run an application, while the set of the other SMDs constituting the distributed cloud are providing a service for supporting the RSMD. Thus, the distributed cloud is a set of generic entities $\textit{MD}s$, each characterized by its specific connectivity, computation and storage for the exchange of data, i.e. the computing speed $f_{\textit{MD}}$, the storage availability $H_{\textit{MD}}$, the position $pos_{\textit{MD}}(x, y)$, the throughput $\eta_{\textit{MD}}$, the number of devices that can be connected to each device $n_{\textit{MD}}$, and each range of action $r_{\textit{MD}}$. Thus, we can write for the generic device $\textit{MD}$:

\begin{equation} \label{eq:SMD} \textit{MD} = \textit{MD}( f_{\textit{MD}}, H_{\textit{MD}}, pos_{\textit{MD}}(x, y), \eta_{\textit{MD}} , n_{\textit{MD}}, r_{\textit{MD}} ) \tag{1.5} \end{equation}

While the connection to the cloudlets can be made only through the unique AP that can be considered built-in in each cloudlet itself, and the connection of the distributed cloud to the RSMD can be made directly, the nodes of the HetNet offer different choices to connect towards the centralized cloud. Thus, for each involved node $\textit{Nod}$ constituting the centralized cloud, specifying that $pos_\textit{Nod}(x,y)$ is the position of the node, $\eta_\textit{Nod}$ is the end-to-end throughput in bit per second between the user and the exploited node, $n_\textit{Nod}$ is the number of devices available to connect, and $r_\textit{Nod}$ is the range of availability of the node, we can write:

\begin{equation} \label{eq:Nod} \textit{Nod} = \textit{Nod}(pos_{\textit{Nod}}(x, y), \eta_{\textit{Nod}} , n_{\textit{Nod}}, r_{\textit{Nod}}) \tag{1.6} \end{equation}

Tab. 1.1 summarizes the entities and the characteristics described above.

These entities are related to one another by physical and logical constraints, derived from the following considerations.

First, to distribute the computation of the application among the different types of clouds, the system has to evaluate which HetNet nodes, cloudlets, and SMDs are available. An entity is available if the RSMD is within the range of action of that HetNet node, cloudlet or SMD and if the entity is not busy, i.e., if the number of devices connected to it, $n_\text{conn}$, is less than $n_\textit{Nod}$, $n_\textit{cl}$, or $n_\textit{MD}$, depending on the type of entity.
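The availability rule can be sketched in a few lines of Python. This is a minimal illustration, not part of the original model: the `Entity` record, its field names, and the use of the Euclidean distance with a "less than or equal to the range" test are assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical record for a HetNet node, cloudlet, or SMD."""
    name: str
    x: float        # position of the entity
    y: float
    radius: float   # range of action r (same units as x, y)
    n_max: int      # n_Nod, n_cl or n_MD: devices that can be connected
    n_conn: int     # devices currently connected


def is_available(entity, dev_x, dev_y):
    """True if the RSMD at (dev_x, dev_y) is in range and the entity is not busy."""
    distance = math.hypot(entity.x - dev_x, entity.y - dev_y)
    in_range = distance <= entity.radius          # assumption: boundary counts as in range
    not_busy = entity.n_conn < entity.n_max
    return in_range and not_busy


def available_entities(entities, dev_x, dev_y):
    """Filter the entities the RSMD can actually use for offloading."""
    return [e for e in entities if is_available(e, dev_x, dev_y)]
```

Applying `available_entities` separately to the lists of HetNet nodes, cloudlets, and SMDs would give the three groups of usable entities for the RSMD.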

Thus, there are $M$ available HetNet nodes $\textit{Nod}$ for offloading towards the centralized cloud, $N$ cloudlets $C_{\textit{cl}}$ and $K$ devices $\textit{MD}$ able to share the computation in the distributed cloud. After the detection of the available entities (a total of $1+M+N+K$, including the local node RSMD, which for simplicity we take as the node of index 0), the next step is to distribute among them different percentages $\alpha_i$ of operations $O$, $\beta_i$ of data $D$, and $\gamma_i$ of memory $S$, under the constraints:

\begin{equation} \label{eq:alpha} \sum_{i=0}^\textit{M+N+K} \alpha_i = 1 \tag{1.7} \end{equation}

and

\begin{equation} \label{eq:beta} \sum_{i=1}^\textit{M+N+K} \beta_i = 1 \tag{1.8} \end{equation}

Alongside the computing capacity, it is possible to define a constraint regarding the storage availability of the cloudlets and the SMDs by means of the following equation, considering the storage availability of the centralized cloud as infinite:

\begin{equation} \label{eq:storage} \gamma_i S \le H_i \quad \quad \forall i \in \{0,1,\dots,\textit{N+K}\} \tag{1.9} \end{equation}

which sets an upper limit on the storage used remotely on the $i$-th cloud infrastructure.
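As an illustration, the three partition constraints (1.7)-(1.9) can be checked numerically for a candidate split. The sketch below is only indicative: the function name, the list-based layout (index 0 for the local RSMD, `beta[0]` unused), and the numerical tolerance are assumptions, and entities with unlimited storage are represented by `math.inf`.

```python
import math


def check_partition(alpha, beta, gamma, S, H, tol=1e-9):
    """Check a candidate partition against constraints (1.7)-(1.9).

    alpha[i]: fraction of operations assigned to entity i (index 0 = local RSMD)
    beta[i]:  fraction of data sent to entity i (beta[0] is ignored)
    gamma[i]: fraction of the storage S placed on entity i
    H[i]:     storage availability of entity i (math.inf if unconstrained)
    """
    return {
        "alpha_sums_to_one": math.isclose(sum(alpha), 1.0, abs_tol=tol),
        "beta_sums_to_one": math.isclose(sum(beta[1:]), 1.0, abs_tol=tol),
        "storage_fits": all(g * S <= h for g, h in zip(gamma, H)),
    }


# Hypothetical example: local device, one centralized-cloud node, one cloudlet.
result = check_partition(
    alpha=[0.5, 0.3, 0.2],
    beta=[0.0, 0.6, 0.4],
    gamma=[0.0, 0.5, 0.5],
    S=100.0,
    H=[10.0, math.inf, 60.0],
)
```

A partition is admissible with respect to these constraints only when all three flags are true.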

A constraint related to the application's requirements $\eta_\textit{app}$ involves the throughput of the entities designated for the offloading: the overall throughput offered by the selected devices should be higher than the minimum throughput requirement of a certain application:

\begin{equation} \label{eq:throughput} \sum_{i=1}^\textit{M+N+K} \eta_i \ge \eta_\textit{app} \tag{1.10} \end{equation}

From the point of view of the RSMD, the energy spent for offloading the application can be written as the sum of the energy spent to perform a part of the task locally, plus the energy spent by the RSMD for the transmission of data to the clouds, plus the energy spent during the idle period while the computation is being offloaded. Hence, the restriction related to the requirement $E_\textit{app}$ leads to the following:

\begin{equation} \label{eq:energy} P_l \frac{\alpha_0 O}{f_l} + \sum_{i=1}^\textit{M+N+K} P_\textit{tr}\frac{ \beta_i D}{\eta_i} + P_\textit{id} \max_{i =1,\dots,\textit{M+N+K}}\left \{ \frac{\alpha_i O }{f_i} \right \} \le E_\textit{app} \tag{1.11} \end{equation}

At the same time, the total latency is the sum of the time for computing locally the $\alpha_0$ percentage of computation, plus the times to transmit/receive data to/from the other computation units, plus the maximum of the times to compute in offloading. Hence, the restriction related to the requirement $T_\textit{app}$ leads to the following:

\begin{equation} \label{eq:latency} \begin{split} & T=t_l^{\text{comp}}+t_{cc}^{\text{tr}}+t_{cc}^{\text{comp}}+t_{cl_j}^{\text{tr}}+t_{cl}^{\text{comp}}+t_{dc_j}^{\text{tr}}+t_{dc}^{\text{comp}} \\ & \alpha_0 \frac{O}{f_l}+ \sum_{i=1}^\textit{M+N+K} \frac{ \beta_i D}{\eta_i} + \max_{i =1,\dots,\textit{M+N+K}}\left \{ \frac{\alpha_i O }{f_i} \right \}\le T_\textit{app} \end{split} \tag{1.12} \end{equation}

Furthermore, the throughput $\eta_i$ is related to the number of devices $n_i$ connected to the $i$-th entity and to the channel capacity $BW_i$, as shown by the following expression based on the Shannon formula:

\begin{equation} \eta_i = \frac{\textit{BW}_i}{n_i}\cdot \log_2{\left(1+\frac{\textit{SNR}_i}{d_i^2}\right)} \tag{1.13} \label{eq:shannon1} \end{equation}

where $\textit{SNR}_i$ is the SNR of the related link and $d_i$ the distance between the receiver and the transmitter. Thus, the optimization of the system consists in finding the values of $\alpha_i$, $\beta_i$ and $\gamma_i$ that satisfy eqs. \eqref{eq:alpha}-\eqref{eq:shannon1}. This is a nontrivial optimization problem, but the complexity can be decreased with the introduction of some simplifications, as presented in the following chapters.
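To make the energy, latency, and throughput relations concrete, the following Python sketch evaluates them for a given partition. It is a minimal illustration under stated assumptions: the function names and the list layout (index 0 for the local RSMD) are hypothetical, and the last term of the energy and latency expressions is read as the maximum remote computing time, as the accompanying text describes.

```python
import math


def throughput(bw, n, snr, d):
    """Eq. (1.13): eta_i = BW_i / n_i * log2(1 + SNR_i / d_i^2)."""
    return bw / n * math.log2(1.0 + snr / d ** 2)


def energy_and_latency(O, D, alpha, beta, f, eta, P_l, P_tr, P_id):
    """Left-hand sides of the energy (1.11) and latency (1.12) constraints.

    Index 0 is the local RSMD (f[0] = f_l); eta[0] and beta[0] are unused.
    """
    remote = range(1, len(alpha))
    t_local = alpha[0] * O / f[0]
    # Transfer time: only entities that actually receive data contribute.
    t_transfer = sum(beta[i] * D / eta[i] for i in remote if beta[i] > 0)
    # Idle/remote time: the slowest remote share determines the wait.
    t_remote = max((alpha[i] * O / f[i] for i in remote), default=0.0)
    energy = P_l * t_local + P_tr * t_transfer + P_id * t_remote
    latency = t_local + t_transfer + t_remote
    return energy, latency


def meets_requirements(energy, latency, E_app, T_app):
    """True if both application requirements are respected."""
    return energy <= E_app and latency <= T_app
```

For instance, with one remote entity, `energy_and_latency(1000, 100, [0.5, 0.5], [0.0, 1.0], [100, 1000], [0.0, 50], 2.0, 1.0, 0.5)` gives a local time of 5, a transfer time of 2 and a remote time of 0.5, in whatever consistent units are chosen for the inputs.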

1.6 Requirements of smart city applications

There are many taxonomies trying to define smart city key areas, where social aims, care for the environment, and economic issues are related and interconnected. The European Research Cluster on the Internet of Things (IERC) has identified in [17] a list of applications in different domains of IoT, including the smart city domain, showing the most strategic technology trends for the next five years. Moreover, the Net!Works European Technology Platform for Communications Networks and Services has issued a white paper [18] aiming to identify the major topics of smart cities that will influence the ICT environment. Furthermore, a relevant document aiming to categorize and define the different applications has been released by the European Telecommunications Standards Institute (ETSI), where several application types have been specified focusing on their bandwidth requirements [19].

Taking into account all the relevant observations presented in these documents, we analyzed some particular smart city applications covering the areas of mobility, healthcare, disaster recovery, energy, waste management and tourism, in order to leverage the UMCC by identifying the requirements related to the QoS.

Each application is defined through the service provided to the citizens, concerning the requirements in terms of throughput, energy consumption, time due to the transferring and computation processes, and number of users. In addition, for every application, the typical requirements of processing, data to exchange and storage have been established. The following list summarizes the definition of these requirements:

- Latency: The latency is defined as the amount of time required by a certain application between the occurrence of an event and its acquisition by the system;

- Energy Consumption: The energy consumption corresponds to the energy consumed for executing a certain application locally or remotely;

- Throughput: The throughput corresponds to the amount of bandwidth required by a specific application to be reliably installed in the smart city environment;

- Computing: The computing corresponds to the amount of computing process requested by a certain application;

- Exchanged data: The exchanged data correspond to the amount of input, output and code information to be transferred by means of the wireless network;

- Storage: The storage corresponds to the amount of storage space required for storing the sensed data and/or the processing application;

- Users: The users correspond to the number of users needed to achieve a reliable service.

The QoS is a function of the previous requirements, each of which plays a more or less important role depending on the aims of the application. In the following, the considered application types are described by highlighting their technological requirements and characteristics, while Tab. 1.2 summarizes the considered application types and the significance of their requirements.

| Application | Latency | Energy | Throughput | Computing | Exchanged data | Storage | Users |
|---|---|---|---|---|---|---|---|
| Mobility | restrictive | variable | restrictive | high | high | variable | high |
| Healthcare | restrictive | non-restrictive | restrictive | high | high | high | low |
| Disaster Recovery | restrictive | restrictive | non-restrictive | high | high | high | variable |
| Energy | non-restrictive | non-restrictive | non-restrictive | high | high | high | high |
| Waste Management | non-restrictive | restrictive | non-restrictive | low | low | low | low |
| Tourism | non-restrictive | restrictive | non-restrictive | high | high | high | variable |

Mobility

All the components in an intelligent transportation system could be connected to improve transportation safety, relieve traffic congestion, reduce air pollution and enhance driving comfort. The three-layered hierarchical cloud architecture for vehicular networks proposed by Yu et al. [20], where vehicles are mobile nodes that exploit cloud resources and services, can be considered as included in the UMCC framework. Real-time navigation with computation resource sharing, where the computation resources in the central cloud are used for data traffic mining, requires minimal latency, since a prompt response is needed. On the other hand, considering that most mobile devices can be recharged directly from the car, energy consumption has to be considered only for pedestrians and bicycles. The necessary throughput, the computational load and the amount of data to exchange are high, whereas storage can be regarded as a secondary requirement, except for security recording.

Healthcare

Intelligent and connected medical devices, monitoring physical activity and providing efficient therapy management by using patients' personal devices, could be connected to medical archives and provide information for medical diagnosis. We considered in the UMCC framework the typical architecture proposed by He et al. [21], requiring a full integration of the clinical devices and efficient processing of the collected data, considering, for instance, a cloudlet near the home of the patient. In this case there are relatively low requirements regarding energy consumption, throughput and number of users, whereas the requirements of latency, computation, exchanged data and storage are high, considering the complexity of the management algorithm, the video monitoring for remote diagnosis and the data to store for the personal record archive.

Disaster Recovery

In [22] a disaster relief scenario is described, where people are facing the destruction of the infrastructures and local citizens are asked to use their mobile phones to photograph the site. This case also involves the three-layered cloud described in the UMCC framework, and requires transmitting a large amount of data, captured by the smartphone cameras, to reconstruct the disaster scene. In this case there are relatively low requirements regarding throughput, whereas it is important to have a quick response and to save the energy of the devices. A lot of computation is needed to reconstruct the scene, and there is a lot of data to exchange and to store. The number of users is variable.

Energy

Energy saving can benefit from the cloud mainly through smart grid systems, which aim to steer the behavior of individuals and communities towards a more efficient and greener use of electric power. Data fusion and mining, as well as scheduling and optimization, are critical in order to include the use of wireless communications to collect and exchange information about electric quality, consumption, and pricing in a secure and reliable fashion. Analyzing the specific aspects regarding the UMCC, if we consider an application where vehicles are involved in a smart grid system, we can suppose that a large data exchange is needed and that there is a lot of computation and storage among a large number of users, whereas there are relatively low requirements regarding latency and throughput, and energy saving is not a problem for the devices involved.

Waste Management

Automatically generated schedules and optimized routes which take into account an extensive set of parameters (future fill-level projections, truck availability, traffic information, road restrictions, etc.) could be planned not only by looking at the current situation, but also by considering the future outlook. A logistic solution that uses wireless sensors to measure and forecast the fill-level of waste and recycling containers could combine fill-level forecasts with an extensive set of collection parameters (e.g., traffic information, vehicle information, road restrictions) in order to calculate the most cost-efficient collection plan. This smart plan would be automatically generated and accessed by the driver through a tablet (example from www.enevo.com). We can expect non-restrictive requirements of latency and throughput, whereas resource-poor equipment has to be taken into consideration. The requirements related to the data to be exchanged, the computation load, the storage and the number of users are not critical.

Tourism

Augmented reality and social networks characterize the applications that benefit most from the cloud, which is also useful for mobile users sharing photos and video clips and tagging their friends in popular social networks like Twitter and Facebook. The cloud is also effective when mobile users require search services, including those based on recognition techniques using voice, images and keywords (example from www.mtrip.com/augmented-reality/). We can expect non-restrictive requirements of latency and throughput, whereas resource-poor equipment has to be taken into consideration. There is a large amount of data to be exchanged and a high load of computation and storage, while the number of users is variable.

1.7 A utility based resource optimization approach

The requirements related to the applications, and the associated QoS, can be met by optimizing the application partitioning and the node/cloud association based on the features of the processing and storage devices and of the transmission equipment introduced in Section 1.4. Moreover, it should be noted that there is a correlation between the application requirements and the features of the UMCC equipment.

The latency experienced in performing a certain application can be seen as composed of the time needed for transferring the application data to the cloud computing infrastructure and the time needed for the cloud computation; it is therefore affected by the processing speed of the involved devices and by the channel capacity and the communication interfaces of the chosen transmission equipment. Furthermore, the energy spent to perform an application depends on the time needed for the data transfer, and thus on the channel capacity and the number of communication interfaces of the communication equipment. We also have to consider the energy spent by the user device while it idles during the cloud computation, so that the processing speeds of the cloud devices are involved as well. The storage value of the application does not directly influence the performance of the system, but it can represent a limitation for the usability of a certain cloud infrastructure, as can the number of potential users of the application.

A feasible optimization regarding the computing and the exchanged data requirements can be performed by operating on the complexity of the computability resources, considering also that, in partial offloading, the exchanged data could not be a predetermined value [23].

In this context, a utility function aiming to optimize the application-dependent QoS is proposed, acting as input for a partitioning and node/cloud association procedure, as shown in Fig. \ref{fig:FunctionalBlocks}. The utility function can be written as:

\begin{equation} U = \sum_{i=1}^{N_{req}}\gamma_i f_i(\xi_i) \label{eq:global-utility} \tag{1.14} \end{equation}

where $N_{req}$ is the number of requirements for a certain smart city application, $\xi_i$ stands for the $i$-th application requirement among those defined in \autoref{sec:requirements}, and $\gamma_i$ is a weight value, while $f_i(\cdot)$ corresponds to a specific utility function used to evaluate the fulfillment of the $i$-th requirement.
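The weighted-sum structure of eq. (1.14) can be illustrated with a short Python sketch. The names below are hypothetical, and the per-requirement functions are free choices: a sigmoid is used here purely as an illustrative shape for one $f_i$, with arbitrary midpoint and steepness.

```python
import math


def sigmoid(x, midpoint=0.0, steepness=1.0):
    """Illustrative per-requirement utility; the parameters are assumptions."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))


def global_utility(requirements, weights, utilities):
    """Eq. (1.14): U = sum_i gamma_i * f_i(xi_i).

    requirements: values xi_i of the application requirements
    weights:      weights gamma_i
    utilities:    callables f_i, one per requirement
    """
    if not (len(requirements) == len(weights) == len(utilities)):
        raise ValueError("requirements, weights and utilities must have the same length")
    return sum(w * f(xi) for w, f, xi in zip(weights, utilities, requirements))


# Hypothetical example with two requirements and equal weights.
U = global_utility(
    requirements=[0.0, 2.0],
    weights=[0.5, 0.5],
    utilities=[sigmoid, lambda x: x / 4.0],
)
```

Keeping each $f_i$ as a separate callable means that an application type can change the shape of a single requirement's utility, or its weight, without touching the rest of the sum.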

In Fig. 4, the functional blocks of the UMCC framework, based on a utility function optimization, are represented. On one hand, the smart city applications define specific requirements; on the other hand, the cloud topologies in a certain scenario set their features. The utility function aims at selecting those cloud topologies that allow the requirements to be met by setting an optimized distribution of the application itself. The optimization of the partition and of the node association will in turn affect the UMCC features available to the other applications.

The maximization of the introduced utility function could be a nontrivial optimization problem, depending on the number of applications and devices acting in the selected scenario. In relation to the introduced requirements, the number of constraints could be reduced, and the complexity of the problem decreased, by introducing suitably defined sub-optimal solutions. All the utility and cost functions presented in the following chapters are particular cases of eq. \ref{eq:global-utility}. In particular, in chapter 2 a cost function is provided that captures the tradeoff between the energy consumption of mobile devices and the time to offload data and to compute tasks on a centralized remote cloud server, evaluating the optimal offloading fraction depending on the network’s load; in chapter 3 and in chapter 5 we focus on a utility function representing the QoS degree perceived by the user, modeled as a sigmoid curve, a well-known function often used to describe QoS perception [24]; finally, in chapter 4, we focus on computation offloading towards the distributed cloud, for offloading a real-time navigation application in a distributed fashion, aiming to minimize the execution time, since the devices are autonomous regarding the energy provision.

1.8 Conclusions

In this chapter we introduced the UMCC framework, a concept that supports the smart city vision for the optimization of the QoS of various types of smart city applications. The UMCC consists of different cloud topologies and diverse types of RATs, which are used for offloading computation and sharing resources among the mobile devices. The QoS depends on the type of application, since it is affected by the defined requirements in a different way depending on the aims of the application itself. A cost function optimization approach is proposed, aiming to select the optimal partition level of the applications and the cloud infrastructures to be used for their computation. The optimization of the QoS is influenced by a large number of features, related to the choice of the distribution of data in the cloud units and of the nodes used for the transmission, but the problem can be restricted by considering some simplifications suggested by the aims and the domain of the application.

Part II - Partial Offloading Optimization

A UMCC framework built on an efficient wireless network allows users to benefit from multimedia services in a ubiquitous, seamless and interoperable way. In this context MCC and HetNets are viewed as infrastructures that together provide a key solution to the major problems being faced: the former allows applications to be offloaded to powerful remote servers, shortening execution time and extending the battery life of mobile devices, while the latter allows the use of small cells in addition to macrocells, exploiting high-speed and stable connectivity under an ever-growing mobile traffic trend. In order to perform the computation offloading efficiently, in the following chapters we explore techniques aiming to move the computing application towards the cloud, considering a non-trivial multi-objective optimization approach that takes into consideration the tradeoff between the energy consumption of the SMDs and the time for executing the application. The aim of the optimization is to find the percentage of application offloading that minimizes the proposed cost function, in such a way that only a part of the application is transferred and computed outside, whereas the rest of the application is computed locally.

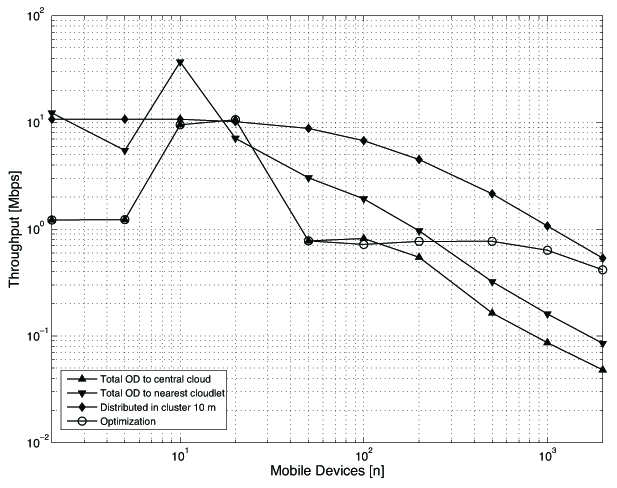

Chapter 2 deals with the partial offloading technique in a centralized cloud scenario. The results show that there exists a particular offloading percentage value fitting the system in case of simultaneously high data and network workload, differently from the simple yes/no offloading decision, which would either move the entire application or perform it locally. In chapter 3 the partial offloading technique is applied using a utility function model arising from the economic world. It aims to measure the QoS, in order to choose the best AP in a HetNet for running the partial computation offloading. The goal is to save energy for the SMDs and to reduce computational time. In chapter 4 the proposed UMCC framework and the partial computation offloading are applied to a vehicular environment for handling a real-time navigation application. In this case, we also consider cloudlets and distributed clouds, so that the SMDs can exploit road side units and other neighboring devices for delegating a shared application.

The content of the following chapters was extracted from publications [P3],[P4], and [P5].

A Partial Computation Offloading Technique

Introduction

Smart cities are considered a paradigm where wireless communication is an enabling factor to improve urban services and the quality of life for citizens and visitors. In a smart city scenario several entities should be taken into consideration: the wireless infrastructure that allows data exchange, the user devices, the sensing nodes, the machine devices, access points, and one or more cloud infrastructures. Moreover, for delivering the requested services, large amounts of data are exchanged among the citizens and the devices, and these data also need to be processed in order to give the correct information to the users. Thanks to various wireless communication technologies, users can move through different environments, indoor and outdoor, providing data to the cloud and accessing services such as browsing, video on demand, video streaming, and information about location and maps. In this context, energy saving and performance improvement of the SMDs have been widely recognized as primary issues. In fact, the execution of every complex application is a big challenge due to the limited battery power and computation capacity of the mobile devices, especially in a smart environment where communication is considered a key to get better features in important areas such as mobility and transportation.

The exploitation of HetNet infrastructures together with the opportunity to delegate the computation load to the MCC, as shown in Fig. 2.1, is an appealing combination for saving the SMD's power resources and executing the requested tasks faster [23].

HetNets involve multiple types of low power radio access nodes in addition to the traditional macrocell nodes in a wireless network, with the major goal of enhancing connectivity. On the other hand, MCC aims to increase the computing capabilities of mobile devices, to conserve local resources (especially battery charge), to extend storage capacity and to enhance data safety, improving the computing experience of mobile users [25].

The distributed execution (i.e., computation/code offloading) between the cloud and mobile devices has been widely investigated [9], highlighting the challenges towards a more efficient cloud-based offloading framework and also suggesting some opportunities that may be exploited. Indeed, the joint optimization of HetNets and distributed processing is a promising research trend [5].

Several works have already analyzed the characteristics and capacity of MCC offloading, for example aiming to extract offloading-friendly parts of code from existing applications [26], [27]. Also, in [28] the key issues in developing new applications that can effectively leverage cloud resources are identified. Furthermore, in [29] a real-life scenario, where each device is associated with a software clone on the cloud, has been considered, and in [30] a system that effectively accounts for the power usage of all of the primary hardware subsystems on the phone has been implemented, distinguishing between CPU, display, graphics, GPS, audio, and microphone.

In [31] an offloading framework, named Ternary Decision Maker (TDM), has been developed, aiming to shorten response time and reduce energy consumption simultaneously with targets of execution including on-board CPU and GPU in addition to the cloud, from the point of view of the single device. In addition, there are many studies that focus on whether to offload computation to a server, providing solutions related to a yes/no decision for the entire task at one time [32], [33] or studies that focus on optimization of the energy consumption in SMDs necessary to run a given application under execution time constraint [34].

Differently from the literature, we propose a partial offloading technique able to exploit the HetNets scenario and the presence of MCC devices - the UMCC framework - by optimizing the amount of partial offloading of the computational tasks depending on the number of devices connected to a network and their location with respect to the WiFi access points or LTE eNodeBs. The SMDs can exploit the partial data offloading to distribute high computational tasks among centralized servers and local computing.

In Fig. 2.2 the workflow and the entities involved in the performance of a task are shown. From the point of view of a single user, the decision about offloading or not is taken on the basis of the following considerations:

- If the task is delegated to the cloud, the energy consumed by the mobile device is due to the data transfer - uploading data and downloading results - plus the energy consumed in an idle state during the outside computation - waiting for the results; the global time for having the task accomplished is related not only to the computational time but also to the transfer time for moving data from the mobile device to the cloud and vice-versa.

- If the task is computed locally, the energy consumed by the mobile device is due to the computation itself; the global time for having the task accomplished is related only to the computational ability of the SMD.

In this chapter the optimization of the entire system is considered, not for a single device but for the whole community of devices, by taking into account partial offloading in a non-trivial multi-objective optimization approach, where both energy consumption and execution time constraints are considered. A cost function is provided that captures the tradeoff between the energy consumption of mobile devices and the time to offload data and to compute tasks on a remote cloud server, evaluating the optimal offloading fraction depending on the network's load. It can be exploited when the network is overloaded and the tasks require large amounts both of computation to perform and of data to exchange.

System Model

The reference scenario is characterized by an urban area with pervasive wireless coverage, where several mobile devices interact with a traditional centralized cloud and request services from a remote data center, as illustrated in Fig. 2.1. Alongside the presence of a pervasive wireless network, the system deals with many sensing and user terminals that generate and exploit a large amount of data. In order to connect the SMDs to the cloud and the data centers for delegating data for the computation, we take into account a simple categorization of the UMCC's transmission entities, considering only two types of RATs forming the basic elements of the HetNets: macrocells and small cells. If, on one side, the strategy of delegating computation to the centralized cloud allows high performance computing centers to be exploited, on the other side it has to cope with the energy spent by the SMDs for transferring data. Similarly, the SMDs that compute the applications locally, in a distributed approach, face energy issues due to the computation itself. In other words, the SMDs consume energy both to delegate the application to the data centers and to compute it locally. Furthermore, both the speed to transmit data and the speed for local computation are related to the energy consumed by the SMDs. Thus, the decision between offloading the application and computing it locally leads to a tradeoff. For this reason our model provides a cost function by resorting to a model previously introduced in [32], [33], which compares the energy used for a 100\% offloading with that used to perform the task locally. The parameters used in the following are listed in Tab. 2.1.

| Symbol | Meaning | Unit |
|---|---|---|
| $P_l$ | power for local computing | W |
| $P_\textit{id}$ | power while being idle | W |
| $P_\textit{tr}$ | power for sending and receiving data | W |
| $S_\textit{md}$ | SMD’s calculation speed | instructions/s |
| $S_\textit{tr}$ | SMD’s transmission speed | bit/s |
| $S_\textit{cs}$ | cloud server’s calculation speed | instructions/s |
| $C$ | instructions required by the task | instructions |
| $D$ | exchanged data | bit |

In our scenario, we suppose that the computation of a certain task requires $C$ instructions. $S_{\textit{md}}$ and $S_{\textit{cs}}$ are the speeds, in instructions per second, of the mobile device and of the cloud server, respectively. Hence, the task can be completed in a time equal to $C/S_{\textit{md}}$ on the device and $C/S_{\textit{cs}}$ on the server. Let $D$ be the number of bits that the device and the server must exchange for the remote computation, and $S_{\textit{tr}}$ the transmission speed, in bits per second, between the SMD and the access point; the transmission of the data then lasts a time equal to $D/S_{\textit{tr}}$. Here we assume that the transmission time is mostly due to the transfer over the access network, because the transfer time over the backbone network can be considered negligible thanks to its higher data rate. Moreover, we neglect the transfer time from the access point back to the user terminal, because the amount of data returned by the centralized server after the computation is small compared with the data sent to it [32], [33].

Hence, it is possible to derive the energy for local computing:

\begin{equation} \label{eq:E_locale} E_{l} = P_{l}\times\frac{C}{S_{\textit{md}}} \tag{2.1} \end{equation}

as the product of the power consumed by the mobile device when computing locally, $P_{l}$, and the time $C/S_{\textit{md}}$ needed for the computation. Similarly, the energy needed to perform the task on the cloud is the energy spent while idling during the remote computation plus the energy spent to transmit the whole data from the SMD to the cloud:

\begin{equation} \label{eq:E_OD100} E_{\textit{od}} = P_{\textit{id}}\times\frac{C}{S_{\textit{cs}}} + P_{\textit{tr}}\times\frac{D}{S_{\textit{tr}}}, \tag{2.2} \end{equation}

where $P_{\textit{id}}$ and $P_{\textit{tr}}$ are the power consumptions of the mobile device, in watts, during idle and data transmission periods, respectively.

Similarly, the time needed for local computing is:

\begin{equation} \label{eq:T_locale} T_{l} = \frac{C}{S_{\textit{md}}} \tag{2.3} \end{equation}

and the time needed when the whole task is offloaded is:

\begin{equation} \label{eq:T_OD100} T_{\textit{od}} = \frac{C}{S_{\textit{cs}}} + \frac{D}{S_{\textit{tr}}} \tag{2.4} \end{equation}

In many applications, offloading the whole task is neither efficient nor feasible, and it is necessary to partition the application at a finer granularity into local and remote parts, which is a key step for offloading.
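The comparison between local execution and full offloading in eqs. 2.1 to 2.4 can be sketched in a few lines of Python. This is only an illustrative sketch: the class name `Device`, the function names and the numeric values in the usage note are assumptions made here for illustration and do not come from the measurements used in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical container for the parameters of Table 2.1."""
    p_l: float   # power for local computing [W]
    p_id: float  # power while idle [W]
    p_tr: float  # power for sending and receiving data [W]
    s_md: float  # SMD calculation speed [instructions/s]
    s_tr: float  # SMD transmission speed [bit/s]
    s_cs: float  # cloud server calculation speed [instructions/s]


def local_cost(dev: Device, c: float) -> tuple[float, float]:
    """Return (E_l, T_l) of eqs. 2.1 and 2.3 for a task of c instructions."""
    t_l = c / dev.s_md
    return dev.p_l * t_l, t_l


def full_offload_cost(dev: Device, c: float, d: float) -> tuple[float, float]:
    """Return (E_od, T_od) of eqs. 2.2 and 2.4 for c instructions and d bits."""
    t_cloud = c / dev.s_cs  # the device idles while the server computes
    t_tx = d / dev.s_tr     # the device transmits the whole data
    return dev.p_id * t_cloud + dev.p_tr * t_tx, t_cloud + t_tx
```

With made-up values such as `Device(p_l=1.0, p_id=0.5, p_tr=2.0, s_md=100.0, s_tr=50.0, s_cs=1000.0)`, a task with `c=1000` and `d=100` can be evaluated with both functions to see which option is cheaper in energy and in time.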

Adaptive Offloading

In this section, two equations are first provided to represent the energy used by an SMD to execute an application in partial offloading and the time needed to execute it. Secondly, the impact of the traffic workload in the wireless network is taken into account, since the RATs and the number of SMDs determine the transmission speed of the offloaded data. Thirdly, a cost function is introduced to evaluate the percentage of offloading that minimizes both energy and time.

To analyze the energy spent when only a part of the application is offloaded, we introduce the weight coefficients ${\gamma}$ and ${\delta}$, satisfying ${0 \le \gamma ,\delta \le 1}$, which represent the fraction of the computational task and the fraction of the exchanged data that are offloaded, respectively. The energy used by a single device, ${E_{\textit{part}\_\textit{od}}}$, is then defined as the sum of the energy spent to perform a part of the task locally and the energy spent idling and transmitting while the other part of the task is executed on the cloud:

\begin{equation} E_{\textit{part}\_\textit{od}} = P_{l}\times\frac{(1-\gamma)\cdot C}{S_{\textit{md}}} + P_{\textit{id}}\times\frac{\gamma\cdot C}{S_{\textit{cs}}} + P_{\textit{tr}}\times\frac{\delta\cdot D}{S_{\textit{tr}}} \label{eq:E_ODpart} \tag{2.5} \end{equation}

Using the same coefficients $\gamma$ and $\delta$ as in eq. \ref{eq:E_ODpart}, we can calculate the time for the partial offloading, ${T_{\textit{part}\_\textit{od}}}$, as the maximum between the time needed to compute the local part of the task and the time needed for the offloading, since the two phases are performed in parallel:

\begin{equation} \label{eq:T_ODpart} T_{\textit{part}\_\textit{od}} = \max \left(\frac{(1-\gamma)\cdot C}{S_{\textit{md}}};\quad\frac{\gamma\cdot C}{S_{\textit{cs}}} + \frac{\delta\cdot D}{S_{\textit{tr}}}\right) \tag{2.6} \end{equation}

The structure and the workload of the network are implicitly considered in eqs. \ref{eq:E_ODpart} and \ref{eq:T_ODpart}. We now describe the effect on ${E_{\textit{part}\_\textit{od}}}$ and ${T_{\textit{part}\_\textit{od}}}$ of the different RATs operating in the HetNets and of the number of devices connected to these RATs. The HetNet mainly consists of two components, macrocells and small cells, with different bandwidths ${\textit{BW}}$. For a single SMD, the data exchange rate ${S_{\textit{tr}}}$ is affected by the bandwidth of the node to which the SMD is connected, by the distance ${d}$ from this node, and by the overall number ${n}$ of SMDs connected to the same node; ${S_{\textit{tr}}}$ can therefore be written explicitly as:

\begin{equation} \label{eq:Str} S_{\textit{tr}} = \frac{\textit{BW}}{n}\cdot \log_2{\left(1+\frac{\textit{SNR}}{d^2}\right)} \tag{2.7} \end{equation}

where $\textit{SNR}$ is the signal-to-noise ratio, a typical parameter of the device.
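Eqs. 2.5 to 2.7 translate directly into code. The following Python sketch is a minimal illustration under stated assumptions: the function and argument names are hypothetical, the bandwidth is assumed to be expressed in Hz so that the rate comes out in bit/s, and the range checks are an addition made here rather than part of the model.

```python
import math


def transmission_speed(bw: float, n: int, snr: float, dist: float) -> float:
    """Eq. 2.7: rate of one SMD on a node of bandwidth bw shared by n SMDs."""
    if n < 1 or dist <= 0:
        raise ValueError("n must be >= 1 and dist must be positive")
    return (bw / n) * math.log2(1.0 + snr / dist**2)


def _check_fraction(name: str, value: float) -> None:
    # gamma and delta are fractions, so they must lie in [0, 1]
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"{name} must lie in [0, 1]")


def partial_offload_energy(gamma, delta, c, d, p_l, p_id, p_tr, s_md, s_cs, s_tr):
    """Eq. 2.5: local share + idle share + transmission share of the energy."""
    _check_fraction("gamma", gamma)
    _check_fraction("delta", delta)
    return (p_l * (1.0 - gamma) * c / s_md
            + p_id * gamma * c / s_cs
            + p_tr * delta * d / s_tr)


def partial_offload_time(gamma, delta, c, d, s_md, s_cs, s_tr):
    """Eq. 2.6: local and remote parts run in parallel, so take the maximum."""
    _check_fraction("gamma", gamma)
    _check_fraction("delta", delta)
    t_local = (1.0 - gamma) * c / s_md
    t_remote = gamma * c / s_cs + delta * d / s_tr
    return max(t_local, t_remote)
```

Setting `gamma = delta = 0` reduces these expressions to the local case of eqs. 2.1 and 2.3, while `gamma = delta = 1` gives the full offloading case of eqs. 2.2 and 2.4, which is a convenient consistency check.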

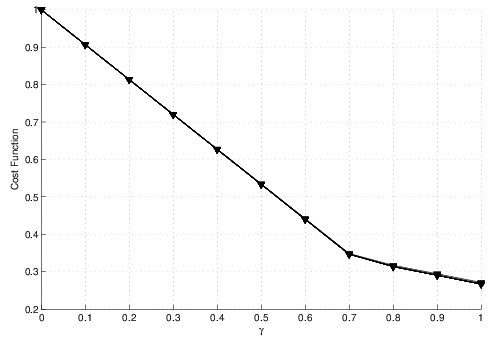

To evaluate the offloading percentage that saves energy and improves performance, a cost function is required that accounts for the minimization of both eqs. \ref{eq:E_ODpart} and \ref{eq:T_ODpart} over the entire set of SMDs. This is a non-trivial multi-objective optimization problem, which we address by defining the cost function as a weighted sum of the two average values, where the coefficients $\alpha$ and $\beta$ satisfy $0\le\alpha,\beta\le1$ and $\alpha+\beta=1$, $N$ is the number of devices in the network, and ${E_l}$ and ${T_l}$ are reference values representing the average energy and time spent when the task is computed locally by an SMD:

\begin{equation} \label{eq:FCost} F = \alpha \frac{\frac{1}{N}\sum_{k=1}^N E_{\textit{part}\_\textit{od},k}(\gamma,\delta)}{E_l} + \beta \frac{\frac{1}{N}\sum_{k=1}^N T_{\textit{part}\_\textit{od},k}(\gamma,\delta)}{T_l} \tag{2.8} \end{equation}

This cost function is based on a network-centric approach, in which a central entity is responsible for choosing the offloading percentages $\gamma$ and $\delta$ after collecting information about the SMDs' features. Furthermore, in the partial offloading procedure, $\gamma$ and $\delta$ are constrained, because before a task is executed it may require a certain amount of data from other tasks [23]. Moreover, the weight coefficients $\alpha$ and $\beta$ are chosen at system level to give more importance to either energy or time saving.
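A minimal Python sketch of the cost function in eq. 2.8, together with a plain search over candidate $(\gamma, \delta)$ pairs, is given below. It rests on assumptions that should be read as such: the names `Smd`, `cost` and `best_pair` are hypothetical, the reference values $E_l$ and $T_l$ are computed here as the averages of the local-execution energy and time over the same set of devices, and the exhaustive search is only one simple way a central entity might pick the pair, not necessarily the procedure used to obtain the results of this chapter.

```python
from typing import NamedTuple, Sequence, Tuple


class Smd(NamedTuple):
    """Hypothetical per-device parameters (symbols of Table 2.1)."""
    p_l: float
    p_id: float
    p_tr: float
    s_md: float
    s_cs: float
    s_tr: float


def energy(dev: Smd, gamma: float, delta: float, c: float, d: float) -> float:
    """Eq. 2.5 for one device."""
    return (dev.p_l * (1.0 - gamma) * c / dev.s_md
            + dev.p_id * gamma * c / dev.s_cs
            + dev.p_tr * delta * d / dev.s_tr)


def exec_time(dev: Smd, gamma: float, delta: float, c: float, d: float) -> float:
    """Eq. 2.6 for one device."""
    return max((1.0 - gamma) * c / dev.s_md,
               gamma * c / dev.s_cs + delta * d / dev.s_tr)


def cost(devices: Sequence[Smd], gamma: float, delta: float,
         c: float, d: float, alpha: float = 0.5, beta: float = 0.5) -> float:
    """Eq. 2.8: weighted sum of normalized average energy and time."""
    n = len(devices)
    # Assumption: E_l and T_l are averages of the purely local case (gamma = delta = 0).
    e_ref = sum(energy(dev, 0.0, 0.0, c, d) for dev in devices) / n
    t_ref = sum(exec_time(dev, 0.0, 0.0, c, d) for dev in devices) / n
    e_avg = sum(energy(dev, gamma, delta, c, d) for dev in devices) / n
    t_avg = sum(exec_time(dev, gamma, delta, c, d) for dev in devices) / n
    return alpha * e_avg / e_ref + beta * t_avg / t_ref


def best_pair(devices: Sequence[Smd], candidates: Sequence[Tuple[float, float]],
              c: float, d: float, alpha: float = 0.5, beta: float = 0.5
              ) -> Tuple[float, float]:
    """Return the candidate (gamma, delta) pair with the lowest cost F."""
    return min(candidates,
               key=lambda gd: cost(devices, gd[0], gd[1], c, d, alpha, beta))
```

By construction, the purely local case yields $F = 1$ under these assumptions, so any pair with $F < 1$ improves on local execution for the chosen weights.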

Numerical Results

During partial offloading, the energy and time in eqs. \ref{eq:E_ODpart} and \ref{eq:T_ODpart} are affected by the fractions of computation and communication that are offloaded, represented by the coefficients $\gamma$ and $\delta$, respectively. These are correlated with each other, since the execution of a remote computation task requires a certain amount of input/output data to be exchanged. We can therefore treat the ratio $\delta/\gamma$ as a typical value, characteristic of a type of application. To summarize typical scenarios, we consider the three kinds of applications listed in Table 2.2, chosen to analyze cases of a smart transportation system [35].

| Application | Computation | Data Transmission | $C$ (instructions) | $D$ (bit) | $\delta/\gamma$ |
|---|---|---|---|---|---|
| 1 - Real time traffic analysis | High | Low | $10^7$ | $10^5$ | $0.25$ |
| 2 - Mobile video and audio communication | Low | High | $10^5$ | $10^7$ | $0.75$ |
| 3 - Mobile Social Networking | High | High | $10^7$ | $10^7$ | $0.50$ |
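Since the ratio $\delta/\gamma$ is treated as fixed for each type of application, a sweep over $\gamma$ determines $\delta$ as well. The short Python sketch below illustrates this under the assumption that $\delta = (\delta/\gamma)\cdot\gamma$ holds over the whole range of $\gamma$; the dictionary and function names are hypothetical, and only the ratios are taken from the table above.

```python
# delta/gamma ratios of the three applications, keyed by application number
DATA_TO_COMPUTE_RATIO = {1: 0.25, 2: 0.75, 3: 0.50}


def candidate_pairs(app_id: int, steps: int = 11) -> list[tuple[float, float]]:
    """Return evenly spaced (gamma, delta) pairs with delta = ratio * gamma."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    ratio = DATA_TO_COMPUTE_RATIO[app_id]
    pairs = []
    for i in range(steps):
        gamma = i / (steps - 1)  # gamma sweeps from 0 (local) to 1 (full offloading)
        pairs.append((gamma, ratio * gamma))
    return pairs
```

For instance, `candidate_pairs(1, steps=3)` yields the three pairs for $\gamma = 0$, $0.5$ and $1$ of Application 1; a finer grid simply uses a larger `steps`.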

We considered a deployment area of $1000\times1000\ m^2$, where one LTE eNodeB with a channel bandwidth of 100 MHz and three WiFi access points with channel bandwidths of 22 MHz are positioned to cover the entire area. Specifically, the access points are positioned at points (0,0), (500,1000) and (0,1000), and the LTE station at (500,500), as shown in Fig. 2.3.

The values of $S_{\textit{md}}$, $P_{\textit{id}}$, $P_{\textit{tr}}$ and $P_l$ are specific parameters of the mobile device. As an example, we used the values of an HP iPAQ PDA with a 400 MHz Intel XScale processor ($S_{\textit{md}}=400$): $P_l\approx0.9$ W, $P_{\textit{id}}\approx0.3$ W and $P_{\textit{tr}}\approx1.3$ W [32].

The cost function coefficients $\alpha$ and $\beta$ are both set to 0.5, to give the same importance to time and energy consumption. Figures 2.4, 2.5, and 2.6 show the cost function for the three applications described in Table 2.2.

Fig. 2.4 shows that, when a task requires high computation and low communication, i.e. Application 1, it is better to offload the task totally, no matter how many devices are connected to the network. In fact, the curves overlap and the cost function assumes the same values for the same percentage $\gamma$.

On the other hand, Fig. 2.5 shows that, when a task requires low computation and high communication, i.e. Application 2, it is better to compute the task locally. In this case a large number of connected devices affects the cost function negatively, and the minimum is at $\gamma = 0$.

The most interesting case is shown in Fig. 2.6. When the network is overloaded, tasks with both a large amount of computation to execute and a large amount of data to exchange, as in Application 3, are best performed for a specific value of $\gamma$. For example, in this case the best performance is obtained for $\gamma = 0.4$ when a population of 5000 devices is within the area, and for $\gamma = 0.7$ with a population of 2000 devices. When the network is not overloaded, instead, as in the cases with fewer devices within the area, it is better to perform total offloading.

Finally, as shown in \autoref{fig:enApp3adapt} and \autoref{fig:timeApp3adapt}, we compare energy and time spent in the adaptive case with those spent for the local execution and the total offloading case to perform Application 3; for the adaptive algorithm we have considered the use of the optimized $\gamma$ parameter following the previous analysis. While for the energy there is a compromise between the two boundary cases, the adaptive function allows the best performance considering time as the primary issue.

Conclusions

In this chapter, a cost function has been defined for simultaneously optimizing time and energy consumption in a scenario where smart mobile devices are supposed to perform an application; the aim was to optimize the amount of computation performed locally and remotely. Remote execution is faster and can relieve mobile devices of the related energy consumption, but it involves data exchange with the cloud server, which costs time and energy to transmit and also depends on the load of the HetNet. The cost function is proposed to evaluate the percentage of the application to offload for time and energy optimization. The results show that, for applications requiring both heavy execution work and heavy data exchange, a particular value of this percentage, which depends on the number of devices, optimizes the performance.

A User-Satisfaction Based Offloading Technique for Smart City Applications

Introduction

New user needs are driving a major boost in the wireless communication techniques employed in smart cities, as well as the rapid growth and diffusion of enhancing technologies. To let users interact with city services and simplify everyday life, MCC and HetNets together become the killer applications for solving the most significant problems at hand: the former by offloading applications to powerful remote servers, shortening execution time and extending the battery life of mobile devices; the latter by providing high-speed and stable connectivity under ever-growing mobile traffic, allowing the use of small cells in addition to macrocells [23]. In such a scenario, users can access remote resources without interruption in time and space.